As AI becomes embedded in critical sectors, the debate has shifted from the rationale for AI regulation to how to regulate effectively. Global approaches vary: the EU favours comprehensive statutes, the US relies on a patchwork of agencies and voluntary standards, and China prefers a State-led model. These choices reflect differing views on markets, rights, risk management and the role of the State. Undoubtedly, the core argument for AI regulation is building trust. Clear rules make AI predictable and accountable, and they encourage adoption by ensuring safety, transparency and human oversight. They also help prevent harms such as deepfakes and discrimination.

However, regulation brings its own challenges: higher compliance costs that can stifle startups, conflicts among international rules and laws that struggle to keep pace with technology. The answer should be proportionate, adaptive regulation focused on outcomes, supported by practical guidance and regulatory sandboxes that foster innovation without lowering standards.

Europe’s AI Act is by far the clearest articulation of a comprehensive approach. It is a horizontal law designed specifically for AI: it sorts systems into four risk tiers (unacceptable, high, limited and minimal) and scales obligations accordingly. Unacceptable uses, such as social scoring and manipulative or subliminal systems, are banned outright on fundamental-rights grounds. High-risk systems, such as those used in critical infrastructure, employment and education, must implement risk management, robust data governance, detailed documentation, user transparency and human oversight, and must undergo conformity assessment. Limited-risk applications must meet basic transparency duties, such as telling users that they are interacting with AI. Minimal-risk systems face few obligations. General-purpose models must respect copyright and publish summaries of their training data; when they reach a scale that creates systemic risk, they attract additional evaluation and cybersecurity requirements. The Act’s governance design envisages an EU-level office and board working with national authorities, with penalties commensurate with the severity of the breach and the size of the firm. The open questions are practical ones: sharpening the boundaries of “high risk,” keeping categories flexible as systems evolve, ensuring startups have clear compliance paths and pairing obligations with positive incentives for AI that advances sustainability, inclusion and public health.

The United States has clearly chosen pluralism. A web of presidential directives, sector regulators such as the FTC and EEOC, state privacy and AI laws, and voluntary industry commitments sits alongside NIST’s AI Risk Management Framework, which provides a common vocabulary for risk mapping, measurement and mitigation.

The upside is flexibility and a culture of strong ex-post enforcement that encourages experimentation and lets sector expertise count. The downside, arguably, is fragmentation: baseline protections vary by state, companies face uneven expectations and policy can shift with each change of administration. In the absence of comprehensive federal legislation, innovators and consumers alike must navigate uncertainty about minimum obligations and recourse.

China’s model is vertical and application-specific, tightly coupled to industrial policy and information control. Rules for algorithmic recommendation and for generative and deep-synthesis services require algorithm filings, security reviews, disclosure of core functions, strict content moderation and provider responsibility for data quality. Centralisation delivers speed and uniformity and reduces ambiguity about what is expected. But it can also burden innovators, prioritise compliance over utility and reduce compatibility with international governance norms, which complicates cross-border services and standardisation.

Between these poles sit notable “third ways.” The United Kingdom favours a principles-based approach applied by existing regulators, emphasising safety, fairness, accountability and explainability, and has established an AI Safety Institute. The attraction is agility and an innovation-friendly stance; the risk is inconsistent interpretation across regulators in the absence of a single statute. Brazil is drafting a rights-centric bill, influenced by its data protection law and oriented toward EU-style risk tiers with human-rights impact assessments. Canada is targeting high-impact AI under its broader Digital Charter, with many core details expected to be set out in regulations.

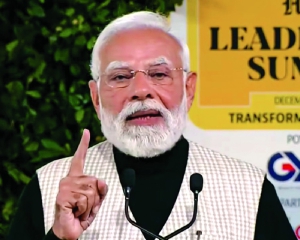

Amid this global churn, India can lead with a values-driven, operational model. Civilisational ideas such as dharma (duty), ahimsa (non-harm), satya (truth) and Vasudhaiva Kutumbakam (the world as one family) align naturally with AI ethics. India’s digital public infrastructure, including Aadhaar, UPI and CoWIN, shows that scale, inclusion and accountability can coexist through interoperable consent frameworks, registries and audit trails. The IndiaAI Mission and BHASHINI further demonstrate how models can be tailored to linguistic and cultural diversity.

India’s distinct contribution can be both practical and philosophical: turn principles into usable checklists, controls and audits; publish model and data cards; run bias-testing protocols; enforce human-in-the-loop review for consequential decisions; and maintain incident playbooks. The way forward is proportionate regulation: strict in high-stakes domains such as healthcare, welfare distribution and policing, and light-touch where risks are limited, so that small firms and public bodies can experiment responsibly. Acting as a bridge for the Global South through inclusive data governance, capacity building and multilateral cooperation, India can draw on its civilisational wisdom to shape a dynamic Ethical AI Charter.

Looking ahead, the most likely scenario is competitive convergence. Some jurisdictions will race to the top, tightening safeguards to build trust and attract quality investment. Others will compete by easing rules to draw business and capital. Over time, proven approaches tend to travel through market forces and public procurement, much as data-protection norms have spread through the “Brussels effect.” No single model can fit every context. Durable governance will be polycentric, involving multiple actors who know their roles, and outcome-based, focusing on measurable harms and benefits rather than box-ticking.

The next wave will be defined less by grand proclamations than by practical tools. Regulatory sandboxes and testbeds will allow supervised trials of high-risk use cases before full deployment. Outcome-based regulation will set clear targets for safety, equity and robustness while permitting different ways of complying, encouraging innovation in how goals are met. Assurance ecosystems will mature, with independent auditors, public benchmarks, transparent model cards and meaningful incident reporting to validate claims in practice. Authorities will build specialised technical capacity, borrowing from aviation and nuclear safety, to investigate complex systems and coordinate responses to cross-border incidents. And truly inclusive processes, those that centre vulnerable groups and affected communities, will strengthen legitimacy, which matters as much to adoption as technical efficacy.

AI governance is now a strategic capability. The jurisdictions that pull ahead will pair proportionate, adaptive and testable rules with credible enforcement and practical toolkits. They will insist on transparency, fairness, safety, privacy and human oversight, and they will show their work through audits and outcomes that people can understand and trust. With digital scale, engagement in standards-setting and a values-based frame that is both ancient and entirely contemporary, India can help shape a genuinely global settlement, one that keeps technology an assistant to humanity, not its master.

The author is Director (Finance), Department of Telecommunications. The views expressed are personal.